In this lab, we conducted a distance azimuth survey of features on the UW-Eau Claire campus mall. This sampling method uses distances and azimuths to determine the locations of objects relative to one or more control points. We collected the data with a laser distance finder, a simple piece of equipment that works in many conditions. The collected data was then used to create a map of the features on the campus mall.

Geographic Setting:

Before starting the exercise, our professor took us behind Phillips Hall to test the laser finder. This location was chosen because it had many features around (cars, trees, signs), had a recognizable location for a static control point, and was right outside our classroom.

Our group chose to survey the UW-Eau Claire campus mall to the north of the Davies Student Center (Figure 1). We recorded the distance and azimuth of multiple objects in the courtyard, including stone sitting blocks, lamp posts, trees, and signs. The area is the old floodplain of the Chippewa River and has only slight changes in elevation. We chose this area because it was on campus, was free from tall or large obstructions that might interfere with our equipment, and offered multiple locations that could serve as control points. It also had many objects we could map, which mattered because we needed 100 points.

Figure 1: An aerial image of the area came from the Eau Claire County geospatial dataset. The 3 inch imagery (2709_29NW) came from the city of Eau Claire.

Methods:

The first step, before going into the field, was to establish the control points from which we would collect the rest of our data. Since this data was indirect (we were not collecting GPS coordinates for every point), we needed control points that would be easy to identify on a basemap, such as Google Earth. We could then use the coordinates of these locations from Google Earth to determine the relative locations of the other data points. Two control points were established (Figure 2).

Figure 2: The green dots at the center and northeast portion of the picture show where the control points were established.

Equipment List:

- TruPulse 200 laser distance finder

- Compass

- Notebook and pencil

Potential problems that past students experienced and possible solutions:

- Problem: Objects were too small to be surveyed

  - Solution: Survey objects within 100 m

- Problem: Data was not stored in the same coordinate system as the map layer

  - Solution: Establish an appropriate coordinate system for the map layer and data right away

- Problem: Control point coordinates were not accurate enough

  - Solution: Examine multiple basemaps (Google Earth, ESRI basemaps, aerial imagery) to determine the most accurate coordinates

- Problem: Forgot which features were surveyed

  - Solution: Determine an efficient surveying method (left to right, near to far)

- Problem: Accidentally aimed at the object behind the targeted feature

  - Solution: Take multiple readings of the same location to confirm the measurement

We collected both azimuth and distance data with the TruPulse 200, a laser distance finder (Figure 3). The finder calculates distance from the time it takes a laser pulse emitted by the device to reflect off an object and return (Laser Technology Inc, 2015a). It determines azimuth by measuring the arc between a fixed reference (true north) and the vertical circle passing through the center of an object; the azimuth is measured clockwise from true north, from 0° to 360° (Laser Technology Inc, 2015b). Before collecting data, we compared north on the TruPulse 200 to north on a regular compass. The two readings roughly matched, which was important for ensuring data integrity.
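The time-of-flight idea described above can be sketched in a few lines of Python. This is only an illustration of the principle, not the TruPulse 200's actual firmware logic; the function names are hypothetical, and the speed of light in a vacuum is assumed (the small slowdown in air is ignored).

```python
# Speed of light in a vacuum (m/s); the slight difference in air is ignored here.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in meters for a pulse's round-trip time."""
    # The pulse travels to the target and back, so the path is halved.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Return the round-trip travel time in seconds for a target distance."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A target 100 m away, roughly the upper range used in this survey.
    t = round_trip_for_distance(100.0)
    print(f"Round trip for 100 m: {t * 1e9:.1f} ns")
    print(f"Distance recovered:   {distance_from_round_trip(t):.2f} m")
```

The round trip for a 100 m target is well under a microsecond, which is why a small timing error, or a pulse that reflects off the wrong object, changes the reported distance noticeably.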

The laser distance finder suited our survey because it collected reasonably accurate measurements, and we did not need extremely accurate data. One weakness was that it was tiring to use for long periods: it was hard to hold up the device and keep squeezing the trigger for 100 data points, and scrolling through the different screens meant pressing a button over and over. Another weakness was that it was sometimes hard to aim the finder's small crosshair on a distant object, which could lead to distance measurement errors.

Figure 3: Our group used a TruPulse 200 laser distance finder to collect bearings (azimuth) and distance readings for each feature (http://www.geo-spektr.ru/product_13123.html).

When working with the laser distance finder, we had to be aware that the data could be influenced by magnetic declination. Magnetic declination is the angle on the horizontal plane between magnetic north (the direction a compass points because of the Earth's magnetic field) and true north (the direction along a meridian toward the geographic North Pole). It varies by geographic location and over time. To find a true bearing, add the magnetic declination to the magnetic bearing, as shown in Figure 4 (National Centers for Environmental Information, 2015). Declination west of true north is negative and declination east is positive. Using NOAA's magnetic field calculator (http://www.ngdc.noaa.gov/geomag-web/), we determined the magnetic declination at both control points in our study area to be 1.08° W ± 0.39°, changing by 0.06° W per year.
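The declination correction above is a single addition, shown here as a minimal Python sketch. The function name and the sample bearings are hypothetical; only the 1.08° W declination comes from our study area.

```python
def true_bearing(magnetic_bearing_deg: float, declination_deg: float) -> float:
    """Convert a magnetic bearing to a true bearing.

    Declination is signed: east is positive, west is negative.
    The result is wrapped into the range [0, 360).
    """
    return (magnetic_bearing_deg + declination_deg) % 360.0


# 1.08 degrees west, so the value is negative.
DECLINATION_DEG = -1.08

if __name__ == "__main__":
    # Sample magnetic bearings (made up for illustration).
    for magnetic in (45.0, 180.0, 0.5):
        corrected = true_bearing(magnetic, DECLINATION_DEG)
        print(f"magnetic {magnetic:6.2f} deg -> true {corrected:6.2f} deg")
```

The modulo step matters for bearings close to north: a magnetic bearing of 0.5° with a westerly declination becomes a true bearing just under 360° rather than a negative number.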

Figure 4: This figure, provided by NOAA (http://www.ngdc.noaa.gov/geomag/icons/case1.gif), demonstrates how to find the true bearing of a location.

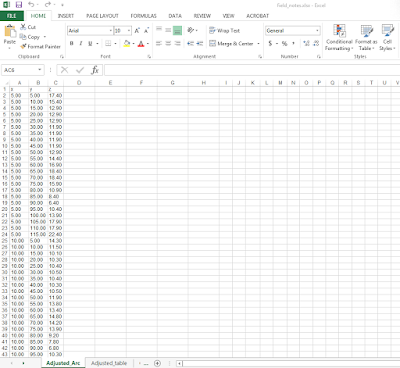

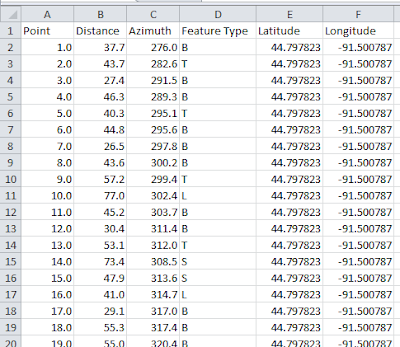

For each point we collected the point number, distance, azimuth, and feature type while in the field. We recorded distance and azimuth because the exercise required them and because they were necessary to determine the relative locations of objects on the campus mall. We recorded feature type because we wanted to inventory the resources on the campus mall. We recorded a point number for each object so that objects could be distinguished individually in ArcMap 10.3.1. Once we were done collecting in the field, the data was entered into an Excel spreadsheet (Figure 5).

Figure 5: The Excel spreadsheet was used for manipulation in ArcMap 10.3.1.

The coordinates for the two control points were determined using Google Earth and entered into the table. Every point surveyed from a given control point was assigned that control point's latitude and longitude, since those coordinates mark the origin of its distance and azimuth reading.
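As a rough sketch of the table layout described above, the following Python builds a comma-delimited version in which each reading carries the coordinates of the control point it was shot from. The column names, control point coordinates, and readings are all hypothetical placeholders; our actual values are not reproduced here.

```python
import csv
import io

# Hypothetical placeholder coordinates (latitude, longitude), not our real values.
CONTROL_POINTS = {
    "CP1": (44.7975, -91.5005),
    "CP2": (44.7980, -91.4995),
}

# Made-up readings: (point number, control point, distance in m, azimuth in deg, feature type)
READINGS = [
    (1, "CP1", 23.5, 41.2, "tree"),
    (2, "CP1", 37.0, 118.6, "lamp post"),
    (3, "CP2", 15.8, 250.3, "sign"),
]


def build_table(readings, control_points) -> str:
    """Return a delimited text table with one row per surveyed feature."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(
        ["point_id", "distance_m", "azimuth_deg", "feature_type", "lat", "lon"]
    )
    for point_id, control, distance_m, azimuth_deg, feature in readings:
        # Every reading starts at its control point, so it inherits those coordinates.
        lat, lon = control_points[control]
        writer.writerow([point_id, distance_m, azimuth_deg, feature, lat, lon])
    return buffer.getvalue()


if __name__ == "__main__":
    print(build_table(READINGS, CONTROL_POINTS))
```

Keeping the origin coordinates on every row means each row is self-contained: a start position, a direction, and a length.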

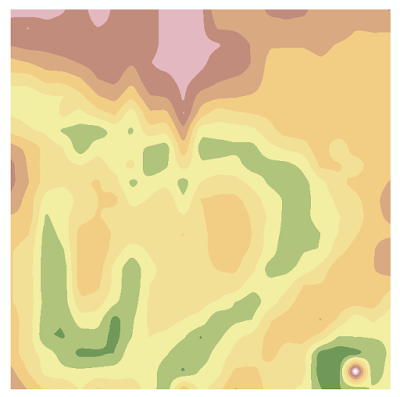

The spreadsheet was then imported into a geodatabase. We later discovered that ArcMap 10.3.1 handled a delimited text table better than an XML-based Excel spreadsheet. Once the table was in the geodatabase, the Bearing Distance To Line tool was used to transform the azimuth and distance readings into line features, shown as the green lines in Figure 6. Next, we used the Feature Vertices To Points tool to create a point feature class from the vertices of the lines produced by the Bearing Distance To Line tool, shown as the purple points in Figure 6. Both tools are located in the ArcToolbox under Data Management Tools, then Features.
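To make the geometry behind this step concrete, here is a minimal Python sketch that computes the endpoint of a line from a start coordinate, an azimuth, and a distance. It is not how ArcMap implements its tools; it assumes a spherical Earth with a mean radius, and the function name and sample coordinates are hypothetical.

```python
import math

# Mean Earth radius in meters; a spherical approximation, adequate for short shots.
EARTH_RADIUS_M = 6_371_000.0


def destination_point(lat_deg, lon_deg, azimuth_deg, distance_m):
    """Return (lat, lon) in degrees reached from a start point.

    azimuth_deg is measured clockwise from true north.
    """
    lat1 = math.radians(lat_deg)
    lon1 = math.radians(lon_deg)
    theta = math.radians(azimuth_deg)
    delta = distance_m / EARTH_RADIUS_M  # angular distance along the sphere

    lat2 = math.asin(
        math.sin(lat1) * math.cos(delta)
        + math.cos(lat1) * math.sin(delta) * math.cos(theta)
    )
    lon2 = lon1 + math.atan2(
        math.sin(theta) * math.sin(delta) * math.cos(lat1),
        math.cos(delta) - math.sin(lat1) * math.sin(lat2),
    )
    return math.degrees(lat2), math.degrees(lon2)


if __name__ == "__main__":
    # Hypothetical control point and a single made-up reading.
    control_lat, control_lon = 44.7975, -91.5005
    lat, lon = destination_point(control_lat, control_lon, 41.2, 23.5)
    print(f"Feature located near {lat:.6f}, {lon:.6f}")
```

Each surveyed feature is the far end of one such line, which is why extracting the line vertices yields the feature locations.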

To continue, we brought an aerial image of Eau Claire (image 2709_29NW) into our map from the EauClaireCity database. We ran into problems again because the data points and bearing lines did not show up in the map at all. We determined that the coordinate system was the problem: we were using the local coordinate system NAD_1983_HARN_WISCRS_EauClaire_County_Feet, which is not very common. We projected the data and the map to WGS84 using the Project Tool, located in the ArcToolbox under Data Management Tools, then Projections and Transformations. This resolved the problem, probably because WGS84 is a very common coordinate system and is the one in which Google Earth, the source of our control point coordinates, reports positions.

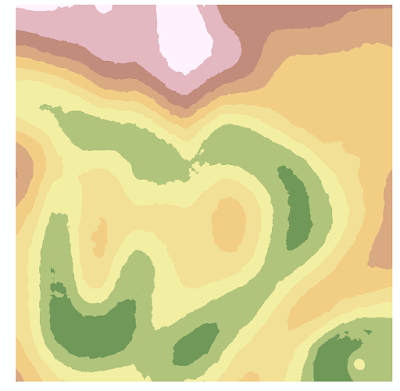

Finally, we compared the collected bearings and points of the objects to the location of the objects in the Eau Claire imagery to assess the accuracy of the survey. Inaccuracies were found, but it was a decent fit (Figure 6). The data was then used to make a map showing the different objects located on the UW-Eau Claire campus mall (Figure 7). Metadata for the final feature class created using the Feature Vertices to Points Tool can be seen in Figure 8. Collection of data in the field can be seen in Figure 9.

Figure 6: Bearings and points are shown in the image by the green lines and purple dots, respectively.

Figure 7: Different features can be seen on the landscape in this map.

Figure 8: Metadata for the final feature class in the dataset.

Figure 9: Niklas Anderson (partner) uses the TruPulse 200 laser distance finder to survey objects from the second control point near Centennial Hall on the UW-Eau Claire campus mall.

Discussion:

After comparing the survey with the aerial image, we found that most of the points were off by a small amount. This was most likely because the data was implicit, meaning the locations of the objects were determined relative to the control points rather than measured directly. Looking at Figure 6, most of the surveyed points in the center of the campus mall are very close to their objects in the aerial image. Some points are far off, though. For example, one point falls on top of Schofield Hall, the building at the top of the image in Figure 7, and another falls on top of McIntyre Library, the building on the left of the image (Figure 7). These errors are most likely due to operator error in measuring distance. When using the laser distance finder, it was sometimes hard to aim the small target in the lens on a distant object. For the two points on the rooftops, it is likely that we accidentally aimed the finder at an object behind the intended target, which would produce a distance greater than the true one. It should also be taken into consideration that holding the finder and pressing its buttons for an extended period was tiring, which could lead to missed points.

These positional errors do not make the data useless. The purpose of this survey was to determine the relative locations of objects on the UW-Eau Claire campus mall so that the objects could be mapped. This map could then be used by anyone looking to get a better understanding of the objects in the area, including surveyors, landscape designers, and university staff in charge of tracking the resources on campus. Since the survey was relatively accurate, the data still made a good map for inventorying the campus mall. An example of when extreme accuracy would be needed is mapping gas wells, whose locations must be known precisely before drilling in the same area.

Overall, the distance azimuth survey is a useful tool for mapping the features of a particular area. Examples of professionals who would find distance azimuth surveys useful include foresters inventorying a section of forest and wildlife biologists mapping the population of a specific species. The latest piece of technology for conducting distance azimuth surveys is a total station, which uses an electromagnetic distance measuring instrument and an electronic theodolite (Figure 10) to measure distances and angles (The Constructor, 2015).

Figure 10: A total station in use (http://api.ning.com/files/QHx93MkxAp4Yfb8mAmRNvO-zFW-cayZTnHxd2qBwXBUMMfE3vvVfOx-Y7b5eCDg6VwlU6iCuglSKJ6I*HfZ5JeMZaHS*2ZS3/TrimbleTotalStation.JPG)

Conclusion:

The exercise provided great experience working with technology that many professionals use to survey the land. We also gained experience troubleshooting issues that arose when working with our collected data. For example, we had to reproject our data from the local coordinate system to WGS84 so that the aerial image of the study area and our data would display together. Our work produced a useful map of the UW-Eau Claire campus mall that can be used to inventory the campus mall's resources. Overall, the field exercise developed useful skills that will help better prepare us for the geospatial workforce.

Works Cited:

Laser Technology Inc. (2015a). "How Lasers Work". Retrieved from: http://www.lasertech.com/How-Lasers-Work.aspx?s=1

Laser Technology Inc. (2015b). "Measuring Azimuth". Retrieved from: http://www.lasertech.com/Laser-Measure-Azimuth.aspx

National Centers for Environmental Information. (2015). "Magnetic Declination". Retrieved from: http://www.ngdc.noaa.gov/geomag/declination.shtml

The Constructor. (2015). "Total Station-Operation, Uses & Advantages". Retrieved from: http://theconstructor.org/surveying/total-station-operation-uses-advantage/6605/